While working, I had the opportunity to join a task force related to machine learning and deep learning. Although I had some interest in the field, I lacked in-depth knowledge. I often came across terms I wasn't familiar with, so I decided to take this opportunity to organize and understand them one by one.

The first terms I will cover are Precision, Recall, and Accuracy. These are common evaluation metrics for machine learning and deep learning models, especially in pattern recognition and classification tasks.

Understanding Precision, Recall, and Accuracy with an Example

Before diving into the definitions, let's consider a simple example:

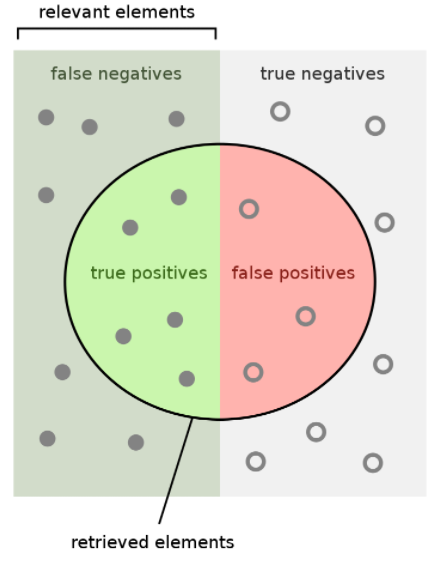

Imagine we have an AI model that classifies a coin as either heads or tails. The model’s predictions can be categorized into four cases:

- True Positive (TP): The actual coin is heads, and the AI correctly predicts heads.

- False Positive (FP): The actual coin is tails, but the AI incorrectly predicts heads.

- False Negative (FN): The actual coin is heads, but the AI incorrectly predicts tails.

- True Negative (TN): The actual coin is tails, and the AI correctly predicts tails.

Key Concepts:

- If the model's prediction matches the actual value (ground truth), it is classified as True; otherwise, it is False.

- If the model predicts the positive class (e.g., heads), it is labeled as Positive; if it predicts the negative class (e.g., tails), it is labeled as Negative.
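To make the four cases concrete, here is a minimal Python sketch that counts them for the coin example. The function name `confusion_counts`, the `positive` parameter, and the sample lists are hypothetical and were made up for illustration; they are not from any particular library.

```python
def confusion_counts(actual, predicted, positive="heads"):
    """Count TP, FP, FN, TN for a two-class problem (illustrative helper)."""
    tp = fp = fn = tn = 0
    for truth, guess in zip(actual, predicted):
        if guess == positive:        # the model said "Positive"
            if truth == positive:
                tp += 1              # correct  -> True Positive
            else:
                fp += 1              # wrong    -> False Positive
        else:                        # the model said "Negative"
            if truth == positive:
                fn += 1              # wrong    -> False Negative
            else:
                tn += 1              # correct  -> True Negative
    return tp, fp, fn, tn


# Made-up sample data: six coin flips and the model's guesses.
actual    = ["heads", "heads", "tails", "tails", "heads", "tails"]
predicted = ["heads", "tails", "heads", "tails", "heads", "tails"]

tp, fp, fn, tn = confusion_counts(actual, predicted)
print(tp, fp, fn, tn)  # 2 1 1 2
```

The second word of each label (Positive/Negative) comes from the prediction, and the first word (True/False) comes from whether that prediction matched the ground truth.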

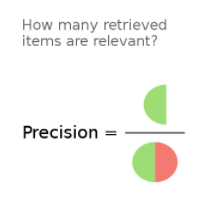

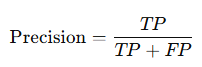

1) Precision (Positive Predictive Value)

So, where does Precision fit in the TP/FP/FN/TN framework?

Precision measures how many of the model’s positive predictions are actually correct. In other words, it is the ratio of correctly predicted positives (TP) to all predicted positives (TP + FP).

A high Precision score means that when the model predicts a positive result, it is usually correct. However, Precision says nothing about the positives the model misses: a model with many false negatives (FN) can still score high on Precision. This is why Recall must also be considered.
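As a rough sketch, Precision can be computed from the two counts it depends on. The function name `precision` and the example counts are assumptions for illustration, and returning 0.0 when there are no positive predictions is just one possible convention.

```python
def precision(tp, fp):
    """Precision = TP / (TP + FP): share of positive predictions that were right."""
    predicted_positives = tp + fp
    if predicted_positives == 0:
        # No positive predictions at all; 0.0 is a convention chosen for this sketch.
        return 0.0
    return tp / predicted_positives


# Made-up counts: 8 correct "heads" predictions, 2 incorrect ones.
print(precision(tp=8, fp=2))  # 0.8
```

Note that FN does not appear anywhere in the calculation, which is why Precision alone cannot reveal missed positives.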

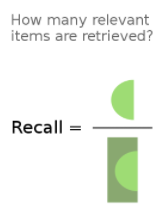

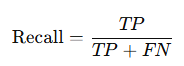

2) Recall (Sensitivity, True Positive Rate)

Recall measures how many actual positive cases the model correctly identifies. In other words, it is the ratio of correctly predicted positives (TP) to all actual positives (TP + FN).

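Precision and Recall are usually reported together when evaluating a classification model, because each one captures a kind of error the other ignores: Precision penalizes false positives, while Recall penalizes false negatives.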
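Recall can be sketched the same way as Precision, with FN taking the place of FP in the denominator. The function name `recall` and the counts below are made up for illustration, and returning 0.0 when there are no actual positives is an assumed convention.

```python
def recall(tp, fn):
    """Recall = TP / (TP + FN): share of actual positives the model found."""
    actual_positives = tp + fn
    if actual_positives == 0:
        # No actual positives in the data; 0.0 is a convention chosen for this sketch.
        return 0.0
    return tp / actual_positives


# Made-up counts: the model found 8 of the 40 coins that were really heads.
print(recall(tp=8, fn=32))  # 0.2
```

With these hypothetical numbers, a model could be right most of the time when it does predict heads and still miss 80% of the actual heads.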

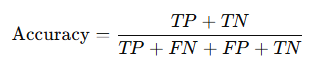

3) Accuracy

Accuracy measures how many predictions (both positive and negative) are correct out of all predictions made. It is calculated as follows:

Accuracy = (TP + TN) / (TP + FP + FN + TN)

Precision and Recall are built from TP, FP, and FN and never use TN. Accuracy also counts TN (correctly predicted negatives), so it gives a more general picture of model performance.
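A minimal sketch of the Accuracy calculation follows. The function name `accuracy` and the counts are hypothetical values picked to keep the arithmetic easy to follow.

```python
def accuracy(tp, fp, fn, tn):
    """Accuracy = (TP + TN) / (TP + FP + FN + TN): share of all predictions that were right."""
    total = tp + fp + fn + tn
    if total == 0:
        # No predictions were made; 0.0 is a convention chosen for this sketch.
        return 0.0
    return (tp + tn) / total


# Made-up counts for 100 coin flips: 40 + 45 correct, 5 + 10 incorrect.
print(accuracy(tp=40, fp=5, fn=10, tn=45))  # 0.85
```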

Conclusion

- Precision is useful when false positives are costly (e.g., spam detection).

- Recall is important when missing a true positive is critical (e.g., medical diagnoses).

- Accuracy gives a general idea of overall model correctness but can be misleading if the dataset is imbalanced.
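The point about imbalanced datasets can be illustrated with a small sketch. The data below is made up: 1,000 samples with only 10 positives, and a hypothetical "model" that always predicts the negative class.

```python
# Made-up imbalanced data: 1 = positive, 0 = negative.
actual = [1] * 10 + [0] * 990
predicted = [0] * 1000  # a lazy model that always answers "negative"

pairs = list(zip(actual, predicted))
tp = sum(1 for a, p in pairs if a == 1 and p == 1)
fp = sum(1 for a, p in pairs if a == 0 and p == 1)
fn = sum(1 for a, p in pairs if a == 1 and p == 0)
tn = sum(1 for a, p in pairs if a == 0 and p == 0)

accuracy = (tp + tn) / len(actual)
# Guard against division by zero; 0.0 is a convention chosen for this sketch.
recall = tp / (tp + fn) if (tp + fn) else 0.0

print(accuracy)  # 0.99
print(recall)    # 0.0
```

In this example Accuracy looks excellent even though the model never finds a single positive case, which is exactly what Recall exposes.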

By understanding these metrics, you can better evaluate and improve machine learning models!

Thank you for reading! 🚀
