When studying deep learning, you often come across terms like epoch, batch, and iteration. These terms are essential for understanding how neural networks learn from data. In this post, let's clarify these concepts in a simple and intuitive way.

What is an Epoch?

An epoch in deep learning refers to one complete pass through the entire dataset during training.

For example, setting the number of epochs to 128 means the neural network passes over the entire dataset 128 times during training.

In each epoch, every training example goes through one forward pass and one backward pass.
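To make the definition concrete, here is a minimal pure-Python sketch that only counts how often each sample is visited. No real model is trained. The function name `run_epochs` and the toy dataset of 10 samples are illustrative assumptions, and reshuffling every epoch is common practice rather than something this post requires.

```python
import random


def run_epochs(dataset, num_epochs, seed=0):
    """Count how many times each sample is visited over num_epochs epochs."""
    rng = random.Random(seed)
    order = list(dataset)
    visits = {sample: 0 for sample in order}
    for _ in range(num_epochs):
        rng.shuffle(order)  # assumption: reshuffle each epoch (common, not required)
        for sample in order:  # one epoch = one pass over every sample
            # a real model would run a forward and a backward pass here
            visits[sample] += 1
    return visits


visits = run_epochs(range(10), num_epochs=128)
print(set(visits.values()))  # {128}
```

Every sample is seen exactly 128 times, no matter how the order changes within each epoch.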

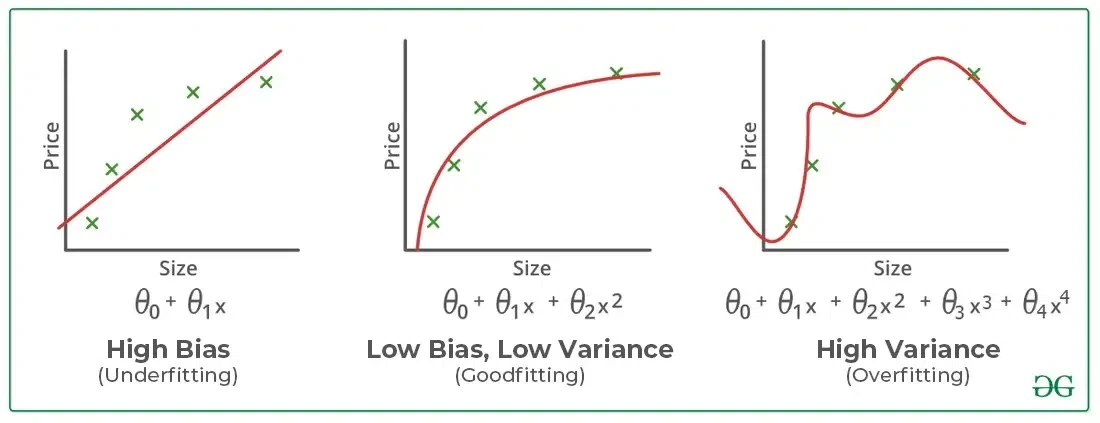

Training for too many epochs can lead to overfitting, while too few can cause underfitting. It’s important to find a number of epochs that balances the two.

What is Batch Size?

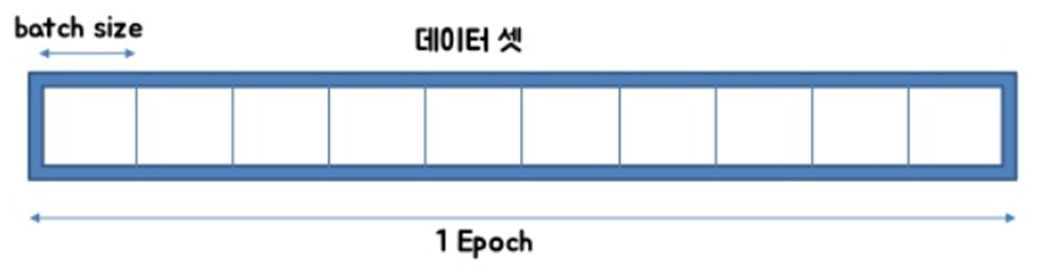

A batch is a subset of the dataset that the model processes in one training step, and the batch size is the number of samples in each batch.

If a dataset contains 1,000 samples, a batch size of 100 means the model processes 100 samples at a time instead of all 1,000 at once.

Batching is essential because loading the entire dataset into memory at once may be infeasible for large datasets. Splitting it into smaller batches keeps memory usage manageable and lets the model update its weights many times per epoch.
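As a rough illustration, the helper below splits a sequence into consecutive batches. The name `make_batches` is hypothetical and the dataset is just the integers 0 to 999 standing in for 1,000 samples.

```python
def make_batches(data, batch_size):
    """Split a sequence into consecutive batches of at most batch_size items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [data[i:i + batch_size] for i in range(0, len(data), batch_size)]


dataset = list(range(1000))           # 1,000 toy samples
batches = make_batches(dataset, 100)
print(len(batches), len(batches[0]))  # 10 100
```

If the dataset size is not a multiple of the batch size, the last batch is simply smaller: 1,050 samples give ten batches of 100 and one of 50. PyTorch's `DataLoader` also keeps that partial batch by default and drops it only when `drop_last=True`. For simplicity this sketch holds the whole dataset in memory; real data loaders typically load each batch on demand instead.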

What is an Iteration?

An iteration is one update of the model's weights using a batch of data.

Since processing the entire dataset at once is impractical, we split it into batches. During training, the model processes one batch at a time and updates its weights after each one, so every batch corresponds to one iteration.
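The sketch below uses a hypothetical `train` function in plain Python. It fits a single weight `w` to the toy relation y = 2x with mini-batch gradient descent on the mean squared error, and it counts one iteration per weight update. The data, learning rate, and one-weight model are illustrative assumptions. The sizes mirror the Example Breakdown below: 500 samples, batch size 50, and 20 epochs.

```python
def train(xs, ys, batch_size, num_epochs, lr=0.01):
    """Mini-batch gradient descent for the model y_hat = w * x; returns (w, iterations)."""
    w = 0.0
    iterations = 0
    for _ in range(num_epochs):
        for start in range(0, len(xs), batch_size):
            bx = xs[start:start + batch_size]
            by = ys[start:start + batch_size]
            # gradient of the mean squared error over this batch only
            grad = sum(2 * (w * x - y) * x for x, y in zip(bx, by)) / len(bx)
            w -= lr * grad  # one iteration = one weight update
            iterations += 1
    return w, iterations


xs = [i / 100 for i in range(500)]  # 500 toy samples
ys = [2 * x for x in xs]            # the true weight is 2
w, iterations = train(xs, ys, batch_size=50, num_epochs=20)
print(iterations)   # 200
print(round(w, 4))  # 2.0
```

The weight changes 10 times per epoch, once per batch, rather than once per pass over the data.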

Example Breakdown

Let's say we have:

- Dataset size = 500 samples

- Epochs = 20

- Batch size = 50

Since each batch contains 50 samples, it takes 10 iterations to process the entire dataset once (500 / 50 = 10 iterations per epoch).

- Total number of updates (iterations) = Epochs × Iterations per epoch = 20 × 10 = 200 iterations
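The same arithmetic also works when the dataset size does not divide evenly by the batch size. In this small sketch, the function `count_iterations` and its `drop_last` flag are hypothetical, with the flag named after the PyTorch `DataLoader` option. A leftover partial batch counts as one extra iteration unless it is dropped.

```python
def count_iterations(dataset_size, batch_size, epochs, drop_last=False):
    """Return (iterations per epoch, total iterations)."""
    if drop_last:
        per_epoch = dataset_size // batch_size                     # discard a partial last batch
    else:
        per_epoch = (dataset_size + batch_size - 1) // batch_size  # ceiling: keep it
    return per_epoch, per_epoch * epochs


print(count_iterations(500, 50, 20))  # (10, 200)
```

For instance, with 510 samples the same settings give 11 iterations per epoch and 220 in total, or 10 and 200 if the last 10 samples are dropped.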

Summary

- Epoch: One full pass through the entire dataset.

- Batch: A subset of the dataset used in training.

- Iteration: A single batch update of the model’s weights.

Understanding these terms is crucial for tuning your deep learning models effectively. Hope this helps clarify the concepts!
